Open Source Video Editing

07 Aug 2020 - zach

Contents

- The problem

- Studying Chinese in college

- A request for help

- Previous work

- High school: Bēowulf

- CHIN 101

- Qingyang documentary

- ENGL 314

- Concept

- Writing

- Principal photography

- Equipment

- Lighting

- Kdenlive

- MLT

- Editing

- Proxy editing

- GPU rendering

- Preview rendering

- Rendering

- Conclusion

The problem

Studying Chinese in college

Studying Chinese was important to me in college. It was a welcome respite from a computer science curriculum that presented me with a wide variety of subjects and instructors every semester, often unrelated to anything I had studied the semester before. In contrast, I knew all of the Chinese faculty, and every class built incrementally on what I had learned the semester before.

Throughout high school, I had wanted to learn Chinese and study abroad in China. In college, I worked hard in my Chinese classes, and the Chinese studies department offered me opportunities to fulfill those goals. I spent a full year in Lanzhou (兰州) on a scholarship. After I returned, I was one of only a handful of students who advanced to 400-level classes taught in Chinese. It’s the only thing I wanted to do in high school that I actually did. I am proud of those accomplishments, but I must recognize that I never would have succeeded without the dedication and opportunities offered to me by the faculty of the Chinese studies department.

A request for help

On 7 June, Dr Li sent an e-mail to me and several other advanced alumni, explaining a problem that had recently befallen the Chinese studies department: the number of CHIN 101 sections had been cut because of low enrollment.

In any given year, the department might operate 3 sections of CHIN 101, 1–2 sections of CHIN 201, and never more than 1 section of CHIN 301. Moreover, besides being smaller, the 301 section was half made up of students who were not language learners at all, but students from China. (They gave us a more immersive learning environment in exchange for easy credits.) In total, I estimate that fewer than 8% of students who start in 101 make it to 301, and fewer still continue to the more advanced classes. Thus, any reduction at the base of this pipeline would threaten the very existence of the advanced classes. Dr Li asked each of us to produce a short video testimonial to encourage prospective students to take CHIN 101.

I owe my success to the dedication of the Chinese studies faculty. Given how much it meant to me in college, I was appalled at the idea that future students would not have as many opportunities as I did. Moreover, given how many students study foreign languages at ISU, I don’t understand why more of them don’t study languages more interesting than Spanish and French.

Over the years, I have heard many myths about the supposed difficulty of learning Chinese, and especially Chinese characters. I decided that a video dispelling these misconceptions, with a genuine testimonial and a survey of the opportunities available, would be more than enough encouragement.

While they probably just expected me to talk into a webcam for two minutes, I figured I owed the faculty—and students like me—something more interesting.

Previous work

For years, I have had a side hobby of producing mediocre videos.

High school: Bēowulf

As a high school sophomore, I became very interested in Old English when we studied Bēowulf. For the final project of the unit, my class was split into groups. Each group was charged with producing a video dramatization of a scene from Bēowulf.

We were instructed to dramatize the scene where Bēowulf is killed by the dragon. I played Bēowulf. I procured costumes from my parents’ days at Renaissance fairs: a full set of chain mail, steel and leather chest armor, a steel shield, swords, and axes. A friend procured the pyrotechnics for my real firefight with the dragon, in which generous amounts of fake blood were used to depict my tragic demise. I, of course, wrote the script in Old English with dialogue extracted from the original poem.

The final result was… interesting. I’m quite certain my teacher had never seen anything like it.

“Ic geneðde fela guða on geogoðe; gyt ic wylle, frod folces weard, fæhðe secan, mærðu fremman!”

CHIN 101

For CHIN 101 in Fall 2014, we were assigned to produce a video as a midterm project. While the requirements could have been easily fulfilled by simply taping two group members having a fake conversation in Chinese in the library, I decided that something more interesting was called for.

I handled the entire production, from writing the script to sourcing equipment. I wrote a script that was basically the college freshman version of The X-Files. Crafting the plot took some measure of creativity since all I had learned by that point was how to count, talk about immediate family members, and describe basic activities.

An important innovation, one I had not tried before, was breaking the entire script down into every required shot. This made principal photography much easier.

But editing the video was a challenge. I had no experience with video editing of any kind, and, due to several constraints, I had to edit the video and learn how to do it all in one sitting.

Still, it was a success. My group got an A, and the video was generally popular.

I had only ever heard of Adobe Premiere Pro, so that’s what I used for editing. Fortunately, it wasn’t that hard to learn. Unfortunately, it’s proprietary. I knew that I could have ‘free’ access to the entire Adobe Creative Cloud while I was a student, but I don’t like this model. If I got used to this very expensive proprietary software, what would I do after I graduated?

This discouraged me from video editing for a while, but I had learned that video production was within my reach. And, eventually, my skills would come in handy again.

Qingyang documentary

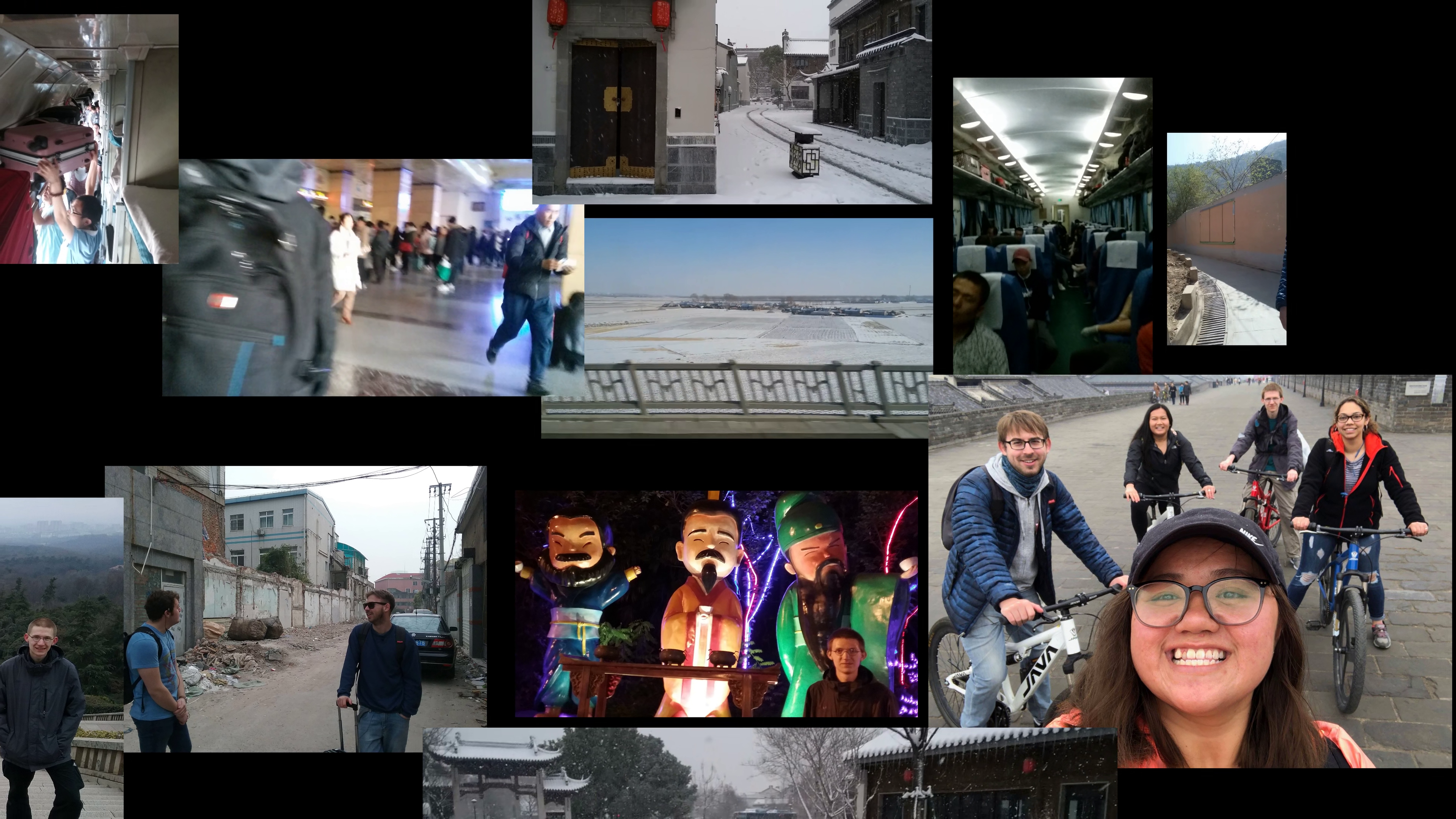

From 2017 to 2018, I spent a year in Lanzhou, studying at Lanzhou Jiaotong University (兰州交通大学) with seven other students from ISU. In winter 2017, our host university offered us an opportunity that would allow us to see more of China, improve our Chinese, and really immerse ourselves in Chinese culture (and, likely, further our host university’s local political objectives). They wanted to send us to teach English at a school in a town called Qingyang (庆阳) in the eastern part of our province. They wanted to send us one at a time, each for two weeks. I was the second to go.

It was fantastic. I got to see a part of Chinese society that I never thought I would get to see in person, and my Chinese improved noticeably. It was great to talk to children because I could actually understand what they were saying most of the time.

After I came back, Professor Wu (吴老师) asked me to create a video about our experiences. I agreed, and then tried to figure out exactly how I was going to do that.

By this time I was in transition between Windows and Ubuntu Linux. In response to my general distrust of expensive proprietary software, I sought a free and open-source video editor. Fortunately, I found Shotcut.

I decided to produce a ‘documentary’. Since I didn’t have much video from Qingyang itself, I decided to drive the narrative using interviews that I conducted with my fellow students who had also gone to Qingyang. After principal photography, I got to work.

The editing experience in Shotcut was pretty good. The only problem I had was that I edited in both Windows and Ubuntu. Shotcut uses absolute file paths for clips and other files, and these differ between Windows and Ubuntu. Every time I switched between the two, I had to go into the XML save file and replace C:\... with /home/zjwatt/... or vice versa. Eventually, I accidentally corrupted the save file and could no longer edit it. Fortunately, this didn’t happen until after the video was finished.
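In hindsight, scripting the swap instead of editing the XML by hand might have spared me the corrupted file. Here is a minimal Python sketch of that idea, not something I actually used. It assumes the project stores clip paths in MLT XML property elements named "resource" and that all media sit under one root folder on each system; both root paths are hypothetical placeholders.

```python
import shutil
import xml.etree.ElementTree as ET
from pathlib import PurePosixPath, PureWindowsPath

# Hypothetical media roots; substitute wherever your clips actually live.
WIN_ROOT = r"C:\Users\me\Videos\qingyang"
LINUX_ROOT = "/home/me/Videos/qingyang"


def to_linux(path: str) -> str:
    """Map a path under WIN_ROOT to the same relative path under LINUX_ROOT."""
    rel = PureWindowsPath(path).relative_to(WIN_ROOT)  # ValueError if not under root
    return str(PurePosixPath(LINUX_ROOT, *rel.parts))


def to_windows(path: str) -> str:
    """Map a path under LINUX_ROOT to the same relative path under WIN_ROOT."""
    rel = PurePosixPath(path).relative_to(LINUX_ROOT)  # ValueError if not under root
    return str(PureWindowsPath(WIN_ROOT, *rel.parts))


def convert_project(src: str, dst: str, mapper) -> int:
    """Rewrite resource paths in an MLT XML project; return how many changed."""
    shutil.copyfile(src, src + ".bak")  # keep a backup before touching anything
    tree = ET.parse(src)
    changed = 0
    for prop in tree.iter("property"):
        if prop.get("name") != "resource" or not prop.text:
            continue
        try:
            prop.text = mapper(prop.text)
            changed += 1
        except ValueError:
            pass  # not a file under the expected root (e.g. a color generator)
    tree.write(dst, encoding="utf-8", xml_declaration=True)
    return changed
```

Switching from Windows to Ubuntu would then be convert_project("project.mlt", "project.mlt", to_linux), with the untouched original left in project.mlt.bak.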

I think it took me more than two weeks to edit, and Professor Wu didn’t think that I was actually going to produce a video. But when I was done, everyone loved it. It was informative and humorous, and it included an emotional arc, something I hadn’t initially intended. Overall, it was all right for something I had put together on the fly.

I learned two lessons about equipment in this documentary:

- A phone camera is more than adequate for most intents and purposes. The only problem is that if you don’t have a way to mount the phone, your life will be difficult.

- After principal photography, I discovered that the acoustic environment of our all-concrete-and-hard-tile dorm was abysmal. It was almost impossible to hear some of my subjects, and the final video must be viewed with subtitles. External microphones are necessary.

ENGL 314

In Fall 2019 I took ENGL 314: Technical Writing. One of our projects that semester was to write a proposal document for some technical project. To prepare students for a world of multimedia, the department also required us to make a video explaining our project. Our instructor told us that just talking into a webcam would suffice, but I have standards.

I proposed to open the system of ventilation tunnels beneath ISU to pedestrian traffic. It wasn’t a good idea, so to bolster its appeal, I decided to interview a man internationally renowned for his expertise: Zachariah Watt.

The video was so funny that my instructor told me he couldn’t give me anything lower than an A.

It was the second time I had used Shotcut, but I was disappointed in how hard it was to set up again. To ensure that it could take full advantage of my hardware, I tried to compile it from source. This was astonishingly difficult, and in the end it didn’t work, so I had to use the Snap version anyway.

Concept

Producing this video would be different. Not only did this take place during the summer, but it was during the COVID-19 pandemic. This took a lot of options off the table. So I decided to take a different approach. I would produce a video of just me talking to the camera.

Making it good would require innovation in both principal photography and editing. These innovations would have to focus on making sure that video of my face didn’t look terrible, and on making the audience stare at my face as little as possible.

Writing

Writing is always the hardest part of any project. There were so many things I wanted to cover, but I had to make sure that the script flowed and made sense, and not every combination of content allowed that. Overall, I think it took me the better part of a month to finish.

Principal photography

Other than the discovery that I just can’t deliver lines for the life of me, principal photography was successful. I got everything filmed within a couple of days, and I made several improvements over previous videos in both equipment and technique.

Equipment

I based my equipment on lessons from the Qingyang documentary.

In the Qingyang documentary, I found that a phone camera was perfectly sufficient, and the phone I had in the summer of 2020 was much better than the one I had in Qingyang. This time, however, I saved myself some trouble and ordered a tripod.

Although my sound environment was generally better than in Qingyang, I knew from experience that a built-in microphone is not good enough for video production. I ordered a cheap lavaliere microphone and it exceeded my expectations.

Lighting

I first learned about lighting in COM S 437 when we learned about shaders in MonoGame. Lighting is complicated. Lighting experts study for years and can astronomically improve the quality of video production with their skill. But when I learned about the standard three-point lighting setup (key, fill, and back light), I realized that there are simple techniques ordinary people can use to improve the quality of their videos.

- The Sun (Key). Unless I blacked out my windows, the Sun would have to be my key light. The main problem was that sunlight varies so much over the course of a day. I tried to film everything at around the same time of day, but as you can see in the video, I didn’t manage it well enough: some segments were obviously filmed at different times.

- A lamp with a bedsheet as a bounce card (Fill). I tried to use a bulb with a spectrum similar to that of the sun. The best I had was a grow light from my hydroponics setup. I had to use an improvised bounce card to diffuse and broaden the light source.

- The rest of the room (Back). The paint in the room was pretty bright and well-illuminated, so I didn’t need to do anything specific for a back light.

I was pretty jarred by the results. My face looked strange. I’m not sure that I liked the look, but it wasn’t exactly terrible. I solicited feedback, and no one complained about it. In any case, I do think it was an improvement over my older, poorly-illuminated videos.

Kdenlive

I decided to try Kdenlive as a new video editor.

I also decided to educate myself. In most of my projects, ignorance is the most common cause of problems and anxiety. I decided to look into how open-source video editors work in an effort to head off any technical problems I might encounter.

MLT

The Media Lovin’ Toolkit multimedia framework (MLT) was written for television and broadcasting. Its API provides a framework for modifying and adding effects to audio and video. It is used by video editors, broadcasting servers, and even Synfig (a 2D animation program) to mix and edit video.

In fact, it can be said that MLT is the actual video editor, and that Shotcut, Kdenlive, and most other free and open-source video editors are just front ends to it. There even exists a front end to MLT called melt that is essentially a command-line video editor—as fully featured as Shotcut and Kdenlive.

In other words, a video editor is just a graphical version of melt. It lets the user arrange clips on a timeline, layer them, and add effects to them in a graphical environment. It then generates an XML description of the project and feeds it to melt to render. The only thing a video editor provides that you wouldn’t get from melt is an on-the-fly preview of your video, and even then, I’m pretty sure that video editors just use MLT to do that.
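To make the "XML description" concrete, here is a minimal Python sketch that writes a tiny MLT-style document: one producer per clip and a playlist that plays trimmed portions of them back to back. The element names (mlt, producer, property name="resource", playlist, entry with in/out frame numbers) follow MLT XML as I understand it; the ids and file names are hypothetical, and a real Kdenlive project carries far more (profiles, tractors, filters, transitions).

```python
import xml.etree.ElementTree as ET


def build_playlist_xml(clips):
    """Build a minimal MLT-style XML document.

    clips: list of (path, in_frame, out_frame) tuples; out is inclusive.
    """
    root = ET.Element("mlt")
    playlist = ET.Element("playlist", id="playlist0")
    for i, (path, start, end) in enumerate(clips):
        # One producer per source file, referenced later by its id.
        producer = ET.SubElement(root, "producer", id=f"producer{i}")
        ET.SubElement(producer, "property", name="resource").text = path
        # "in" is a Python keyword, so the attributes go in a dict.
        ET.SubElement(playlist, "entry",
                      {"producer": f"producer{i}", "in": str(start), "out": str(end)})
    root.append(playlist)  # the playlist comes after the producers it uses
    return ET.tostring(root, encoding="unicode")
```

Saved to a file, a document like this could in principle be previewed with melt timeline.mlt, which is essentially what a graphical editor does on a much larger scale.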

This particular fact turned out to be extremely useful later.

Editing

Overall, the experience of editing in Kdenlive was good. The key bottleneck was previewing the video in the editor.

- The high-resolution video has an exceptionally high bit rate. Even VLC can’t play it smoothly.

- Not only must Kdenlive play the video, but it must also apply the effects in real time.

These two problems are made worse by the fact that Kdenlive’s rendering process is single-threaded. This seems like a waste, since MLT is definitely capable of operating in parallel.

In the following sections, I discuss some of Kdenlive’s features that helped me work around this problem. As a test case, I used the segment of the video where I mention traveling in China. I had so many travel clips that I wanted to use as B-roll, but there wasn’t enough room for all of them. So I created a segment where various clips pan across the scene into a black background. With almost a dozen video clips being transformed and animated, it’s easily the most effects-heavy segment in the entire video. It caused problems not only in preview but also in rendering, as detailed in the Rendering section.

Proxy editing

Since my phone records in full 4K, the first issue I had was that the video files were too big. Fortunately, Kdenlive supports proxy editing, in which it creates a temporary low-resolution version of each original clip for display in the editor. When the project renders, it renders from the original, full-resolution clips. The workflow is seamless. But although proxies make the video playable, Kdenlive still gets really slow in the presence of any video effect, even on still images.
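Kdenlive generates its proxies internally, and I don’t know its exact encoding settings. As a stand-in illustration of what a proxy is, here is a Python sketch that builds an ffmpeg command producing a 540p copy of a clip. The height, codec choices, and the .proxy.mp4 naming are my own assumptions, not Kdenlive’s.

```python
import subprocess
from pathlib import Path


def proxy_command(src: Path, proxy_dir: Path, height: int = 540) -> list[str]:
    """Build an ffmpeg command that writes a low-resolution proxy of src."""
    dst = proxy_dir / f"{src.stem}.proxy.mp4"
    return [
        "ffmpeg", "-y", "-i", str(src),
        "-vf", f"scale=-2:{height}",  # keep aspect ratio, force an even width
        "-c:v", "libx264", "-preset", "veryfast", "-crf", "23",
        "-c:a", "aac",
        str(dst),
    ]


def make_proxies(clips: list[Path], proxy_dir: Path) -> None:
    """Encode a proxy for every clip, stopping on the first ffmpeg failure."""
    proxy_dir.mkdir(parents=True, exist_ok=True)
    for clip in clips:
        subprocess.run(proxy_command(clip, proxy_dir), check=True)
```

The editor would display the proxies while the final render reads the 4K originals, which is the trade-off described above.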

GPU rendering

I also tried the experimental GPU processing feature, enabled by the movit library. It is supposed to give the user a better preview playback experience by using the GPU to render the video effects for the preview. I hope that this feature gets more traction in the future, as it seems promising. The preview displays with a lower frame rate, which is probably just overhead. I only had two problems with it.

- It isn’t stable, but the crashes were infrequent enough that I could still work. Some bugs also caused clips not to play, or the wrong clip to play.

- Compositing didn’t work. movit is a different framework from MLT, and I know that the movit library itself is capable of proper compositing, so I suspect the problems are just an issue of going between the two frameworks.

Other than that, GPU processing was slightly better than CPU processing, though there is much work to be done.

Preview rendering

Preview rendering is, by far, the smoothest way to view effects. You select a segment of the video, designate it as a preview zone, and click the preview render button. Then, Kdenlive will render that specific segment in advance.

Of course, like the rest of Kdenlive’s rendering, it is single-threaded. And, like the rest of Kdenlive’s rendering, it doesn’t need to be, and parallel processing would greatly improve its performance.

But it works pretty well.

Rendering

After all the work I did, it was finally time to render the final video. Remember that Kdenlive does no video rendering: it merely compiles a description of your video in XML and hands it off to mlt-melt. Fortunately, MLT is fully parallel.

Unfortunately, MLT has a problem with memory. I monitored my system with htop as the video rendered, and I noticed that MLT consumed a lot of memory. This wasn’t surprising, as my clips are enormous. I was a little surprised that it seemed to be loading entire clips into memory. I’m not sure if this is technically necessary: surely it is possible to load the clips from disk in more manageable segments. But what was really a problem was that it never seemed to free memory after it was done with a clip.

Then, it crashed.

First, I despaired. I imagined the worst: that I had spent a month producing a video, and now no one would ever see it because I couldn’t render it.

After I got that out of the way, I decided to diagnose the problem. Since I was rendering to MKV, I could watch the video up to the point where the rendering crashed, and it always crashed right as it was about to render the segment with all the travel clips (mentioned in the Editing section). It was clear that MLT tried to load every one of those video clips into memory. Combined with all the memory it was already using, this was too much, and the Linux kernel, deciding that MLT was a threat to system stability, killed the process.

Since my 8 GB of RAM was clearly enough to render most of the video, I decided to render it in chunks: (1) the travel segment, (2) everything before it, and (3) everything after it.
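Finding the split points is simple arithmetic. This Python sketch converts timecodes to frame numbers and returns the chunks in timeline order as inclusive (in, out) frame ranges, which is how I understand MLT counts in and out points. The 30 fps rate and the timecodes in the usage line are hypothetical; phone footage is often 29.97 fps, which would need a fractional rate.

```python
def timecode_to_frame(tc: str, fps: int) -> int:
    """Convert 'MM:SS' or 'HH:MM:SS' to a frame index at an integer frame rate."""
    seconds = 0
    for part in tc.split(":"):
        seconds = seconds * 60 + int(part)
    return seconds * fps


def split_around(total: int, start: int, stop: int) -> list[tuple[int, int]]:
    """Split frames [0, total) into before / segment / after around [start, stop).

    Returns inclusive (in, out) pairs and skips empty chunks, e.g. when the
    heavy segment sits at the very start or end of the timeline.
    """
    bounds = [0, start, stop, total]
    return [(a, b - 1) for a, b in zip(bounds, bounds[1:]) if b > a]
```

For a travel segment running from 2:10 to 2:40 in a five-minute video, split_around(timecode_to_frame("5:00", 30), timecode_to_frame("2:10", 30), timecode_to_frame("2:40", 30)) gives [(0, 3899), (3900, 4799), (4800, 8999)]: three chunks that cover every frame exactly once.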

I discovered that, in addition to rendering to a file, Kdenlive can give you the XML file directly instead of passing it to MLT. This was useful because I could then render from the command line, which let me see MLT’s output in real time instead of inspecting the log file that Kdenlive writes.
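Rendering from the command line is easy to wrap in a few lines of Python. This sketch builds a melt command for the exported XML and echoes melt’s output as it arrives. The "-consumer avformat:FILE" form is standard melt usage; passing in= and out= after the project file to limit the frame range is an assumption based on how melt applies properties to the preceding producer, so check it against your MLT version. The binary name is a parameter because my system called it mlt-melt.

```python
import subprocess


def melt_command(project, out_file, frame_range=None, binary="melt"):
    """Build a melt command line that renders project to out_file."""
    cmd = [binary, project]
    if frame_range is not None:
        first, last = frame_range
        # Assumption: melt applies in=/out= to the producer just before them.
        cmd += [f"in={first}", f"out={last}"]
    cmd += ["-consumer", f"avformat:{out_file}"]
    return cmd


def render(cmd):
    """Run melt, printing its progress output live; return the exit code."""
    # melt reports progress on stderr, so merge it into stdout. Text mode uses
    # universal newlines, so carriage-return progress updates arrive as lines.
    with subprocess.Popen(cmd, stdout=subprocess.PIPE,
                          stderr=subprocess.STDOUT, text=True) as proc:
        for line in proc.stdout:
            print(line, end="", flush=True)
    return proc.returncode  # set once the with-block has waited for melt
```

Each chunk then becomes one call, such as render(melt_command("project.mlt", "travel.mkv", (3900, 4799), binary="mlt-melt")), with hypothetical file names and frame numbers.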

I went out for lunch, and when I came back, all three chunks had rendered. I stitched them together with ffmpeg’s concat command.
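ffmpeg offers more than one way to join files. Assuming the concat demuxer, the stitching step looks roughly like this Python sketch: it writes the list file the demuxer reads and copies the streams without re-encoding, which only works because all three chunks came out of the same render settings. The file names are hypothetical.

```python
import subprocess
from pathlib import Path


def concat_list(parts):
    """Text for ffmpeg's concat demuxer: one "file '...'" line per chunk."""
    lines = []
    for p in parts:
        # A literal single quote must close the quote, escape it, and reopen.
        escaped = str(p).replace("'", "'\\''")
        lines.append(f"file '{escaped}'")
    return "\n".join(lines) + "\n"


def concat_command(list_file, output):
    # -safe 0 allows arbitrary paths in the list; -c copy avoids re-encoding.
    return ["ffmpeg", "-f", "concat", "-safe", "0", "-i", list_file,
            "-c", "copy", output]


def stitch(parts, output, list_file="parts.txt"):
    """Write the list file, then let ffmpeg join the chunks in order."""
    Path(list_file).write_text(concat_list(parts))
    subprocess.run(concat_command(list_file, output), check=True)
```

The chunks must be listed in timeline order (before, travel, after), regardless of the order in which they were rendered.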

Conclusion

I handed over the video, and after a brief exchange and a waiver, the ISU World Languages and Cultures department uploaded it to their YouTube channel. It is available in 4K.

My video editing abilities have been useful at unexpected times in the past. I wonder what I will do with these abilities in the future.